Around ten o’clock on the evening of March 18th, 2018, autonomous vehicle (AV) development was brought into the public eye in perhaps the most jarring example to date of how truly nascent this technology is, and of the dangers posed by the current state of the art: systems that still require human involvement yet, however unintentionally, discourage it. A Level 3 autonomous vehicle operated by Uber, with a human “emergency backup driver” behind the wheel, struck and killed a pedestrian on a Tempe, Arizona street. The pedestrian had been walking her bicycle along a sidewalk as the Uber vehicle approached, traveling at approximately 38 mph in a 45 mph zone, according to accident data reviewed and released by the Tempe Police Department. When she stepped into the roadway (not, it should be noted, in a crosswalk) in an apparent attempt to cross, the Uber vehicle struck her at speed, neither braking nor steering in an attempt to avoid the collision. In a statement released after the investigation began, Tempe Chief of Police Sylvia Moir indicated that Uber was likely not at fault and that the collision would have been all but impossible to avoid. I believe the first part of her statement is true, but on the second part, I have to respectfully disagree.

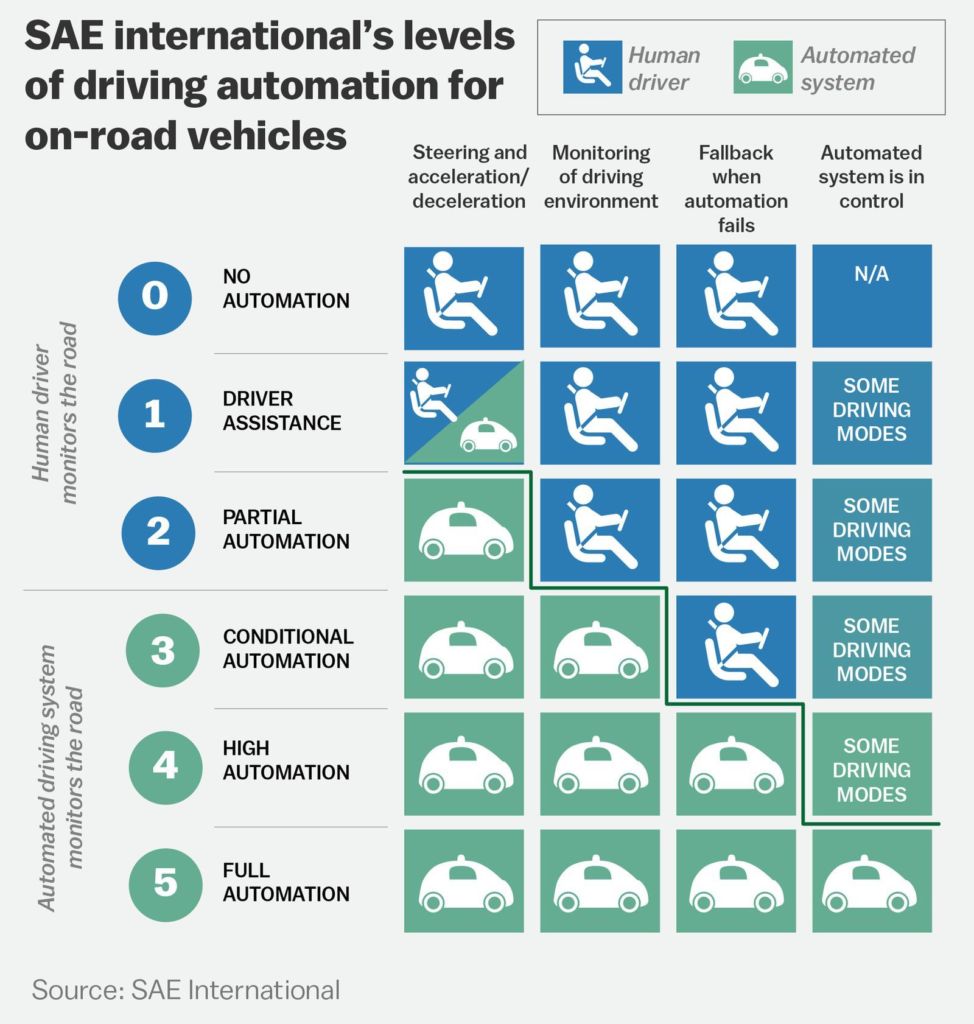

In order to understand the nature of this incident, it’s important to know what Level 3 autonomous driving really means. The National Highway Traffic Safety Administration (NHTSA) has officially adopted guidelines for classifying autonomous driving according to levels of human involvement; the guidelines were laid out by the Society of Automotive Engineers (SAE) in 2014 to clarify what exactly constitutes autonomous operation in its various forms. In layman’s terms, these are the classifications:

- Level 0: No autonomous functionality whatsoever; a traditional automobile operated exclusively by a human.

- Level 1: Most driving functions are handled by a human, but one specific function (such as accelerating and decelerating with an adaptive cruise control system) is handled by the automobile.

- Level 2: The automobile assumes all basic tasks (acceleration, braking, steering) associated with driving under some circumstances, but human intervention is required if the system miscalculates, and the human driver must monitor the vehicle and its surroundings at all times and be prepared to retake control.

- Level 3: The automobile assumes all basic tasks associated with driving, and also monitors the vehicle and its environment in order to respond to unplanned circumstances. Human involvement is theoretically only required in case of an emergency scenario in which the vehicle fails to respond properly to its environment.

- Level 4: The automobile assumes all driving tasks in specified environments (such as traffic jams) but a human driver is still required, and the vehicle must be capable of parking itself safely if the human driver is unable to retake control.

- Level 5: Full automation with no human input required; in all circumstances, the vehicle operates under its own control and can travel anywhere at any time without human occupants.

This infographic from Vox simplifies things a bit:

As should be apparent from these classifications, Levels 2, 3, and 4 all rely on human involvement, inversely proportional to the level of automation at play. Consequently, AVs with anything less than Level 5 technology are typically referred to as “semi-autonomous” vehicles. Level 1 automation has been around for a while, with systems like adaptive cruise control (which uses sensors to maintain a set distance from the vehicle ahead in addition to maintaining a set speed) and self-parking (in which the vehicle steers while the driver accelerates and brakes) now considered mainstream. Because these systems require a high level of driver involvement, they have had little impact on driving behavior, and the driving public has regarded them as little more than luxury features. Move up a level from there, however, and a real impact on the way people think about driving, and on how they perceive personal responsibility, is evident.

Arguably the first highly visible collision involving a Level 2 AV occurred in Florida on May 7th, 2016. The vehicle in question was a Tesla Model S equipped with the company’s Autopilot functionality, a Level 2 autonomy system capable of operating the automobile under limited circumstances, such as an open, well-marked freeway under clear skies. Such were the conditions on the day that Joshua Brown’s Tesla struck a tractor trailer at highway speed. The truck was turning to cross the roadway at the time, and Brown’s Tesla had alerted him several times to retake control of the vehicle. He did not comply with these warnings, and his car ultimately collided with the tractor trailer at 74 mph. He had set the vehicle’s cruise control to that speed less than two minutes before the crash.

Brown had engaged Autopilot despite clear warnings from Tesla (the company) that its perhaps disingenuously named Autopilot was a driver-assistance system, not a substitute for situational awareness. Brown’s Tesla (the car) also issued clear real-time alerts that human control of the vehicle was required, yet according to the NHTSA, seven seconds elapsed between the moment the tractor trailer should first have been visible and the moment Brown’s car struck it, without Brown retaking control. They were to be seven of the last seconds of Brown’s life.

Back to Tempe. On the evening of March 21st, 2018, police released dash-cam footage from the Uber vehicle, a Volvo XC90 SUV modified to operate with Uber’s proprietary AV technology. The footage shows “emergency backup driver” Rafaela Vasquez looking down at her lap for nearly five seconds prior to the collision that claimed the life of 49-year-old Elaine Herzberg. Approximately 1.4 seconds elapsed from the time Herzberg became visible to cameras mounted in the Uber vehicle to the time of impact.

Instrumented testing of the Volvo XC90 shows that it is capable of stopping from 60 mph in 121 feet. At 38 mph, the speed at which the Uber vehicle was traveling when it struck Herzberg, a vehicle covers 55.73 feet per second, or just over 78 feet in 1.4 seconds. Not taking into account the driver’s reaction time, a typical vehicle traveling at 40 mph requires 80 feet to come to a complete stop. Had Vasquez been in full control of the Uber vehicle, and had she noticed and reacted to Herzberg just a half-second before Herzberg became visible to the vehicle’s cameras (not at all implausible, according to some research), she would have had 1.9 seconds, or roughly 106 feet, in which to stop, and Herzberg might very well be alive today. This accounts for braking alone; had Vasquez been in full control of the vehicle, she could presumably also have steered to avoid the collision. In light of these circumstances, again, I have to disagree with Tempe police chief Moir’s earlier comment that this loss of life would have been nearly impossible to avoid.
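The arithmetic above can be checked with a short Python sketch. It assumes constant deceleration, so that braking distance scales with the square of speed, and it ignores driver reaction time, as the paragraph does. Scaling the 40 mph and 60 mph reference figures down to 38 mph is an assumption of this sketch rather than a figure from the original sources, and the helper names are illustrative only.

```python
# Back-of-the-envelope check of the Tempe stopping-distance figures.
# Assumptions (not from the original sources): constant deceleration, so
# braking distance scales with speed squared; driver reaction time ignored.

FEET_PER_MILE = 5280
SECONDS_PER_HOUR = 3600


def mph_to_fps(mph: float) -> float:
    """Convert miles per hour to feet per second."""
    return mph * FEET_PER_MILE / SECONDS_PER_HOUR


def distance_covered(mph: float, seconds: float) -> float:
    """Feet traveled at a constant speed over a time window."""
    return mph_to_fps(mph) * seconds


def braking_distance(mph: float, ref_mph: float, ref_feet: float) -> float:
    """Scale a reference braking distance to another speed (d grows with v**2)."""
    return ref_feet * (mph / ref_mph) ** 2


speed = 38.0  # mph, per the Tempe police data

# 1.4 s of camera visibility, and 1.9 s with a half-second earlier reaction
for window in (1.4, 1.9):
    print(f"{window} s at {speed:.0f} mph covers {distance_covered(speed, window):.1f} ft")

# Braking distance at 38 mph, scaled from the two reference figures in the text
print(f"typical car (80 ft from 40 mph): {braking_distance(speed, 40, 80):.1f} ft")
print(f"Volvo XC90 (121 ft from 60 mph): {braking_distance(speed, 60, 121):.1f} ft")
```

Under these assumptions, the 1.9-second window yields roughly 106 feet of road, more than either scaled braking estimate; real stopping distances also depend on road surface, tires, and brakes.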

In the final moments of the video released by Tempe police on March 21st, Vasquez is seen looking up from her lap and out through the windshield of the Uber vehicle. Immediately prior to the crash that killed Elaine Herzberg, a look that can only be described as one of horror came across Vasquez’s face as she realized what was about to occur.

Just five days after the Uber crash in Tempe, on March 23rd, a Tesla Model X struck a freeway divider on California’s Highway 101. The vehicle’s owner, Wei Huang, was behind the wheel at the time of the accident, with Autopilot engaged. According to a Tesla blog post released during investigation of the incident, “The driver had received several visual and one audible hands-on warning earlier in the drive and the driver’s hands were not detected on the wheel for six seconds prior to the collision. The driver had about five seconds and 150 meters of unobstructed view of the concrete divider… but the vehicle logs show that no action was taken.” Huang was pronounced dead at a nearby hospital soon after the crash.

Vehicles more advanced than Level 1 and less sophisticated than Level 5 full autonomy present a psychological quandary for their occupants. Human beings are wired to maintain situational awareness at all times; the instinct to avoid danger is as deeply ingrained in us as it is in virtually all species. Yet the fatal crashes described here, along with many less dire incidents, show that behind the wheel of a vehicle with a system as limited as Tesla’s Autopilot or as broadly capable (at least in theory) as Uber’s present technology, we infer a much greater degree of safety than reality warrants. We are lulled into a false sense of security, paradoxically relaxing our situational awareness under circumstances that arguably demand far more vigilance, not less.

While Uber has temporarily suspended testing of its AVs in Arizona in the aftermath of the Tempe collision, as well as in San Francisco, Toronto, and Pittsburgh, the development of this technology shows no signs of stopping, and regulatory climates are shifting rapidly in its favor. Just a few weeks before the crash that claimed Elaine Herzberg’s life, Arizona governor Doug Ducey signed an executive order allowing autonomous vehicles to be tested in the state without human backup drivers behind the wheel.

It would be cynical to assume that corporations developing AV technology are deliberately exposing people to avoidable risk in the interest of racking up real-world testing miles to inform product improvement. And to their credit, even before the incidents described in this article occurred, many automakers publicly stated that, in the interest of public safety, they would not sell autonomous vehicles to the public until full autonomy had been achieved. (Volvo, for its part, voluntarily stated as early as 2015 that it would accept full liability for accidents involving its autonomous vehicles; it is reasonable to assume that Uber’s modifications to its XC90s circumvent manufacturer liability.) These automakers are not testing vehicles on public roads the way Uber has, and likely soon will again. It would be foolish, however, to assume that such testing has claimed its last fatality, to say nothing of nonfatal injuries. If neither regulators nor technology companies are willing to act in the public interest while avoidable risk on public roads increases, an alternative measure is available, and it is in the hands of organizations notably averse to risk.

The insurance industry is in a position to mitigate the dangers of autonomous driving systems less sophisticated than the SAE’s Level 5. As Jeff Wargin, VP of product management at Duck Creek Technologies, put it: “When insurers have access to non-approximated data – data about the actual risk, the actual driver, and what they’re actually doing and where they’re actually going when they drive, such as from telematics devices – they have a much clearer picture of what they’re insuring, and what that should cost.” In light of the dangers posed by systems that, if only tacitly or subversively, encourage distracted driving, the responsible action on the part of insurers should start with pricing personal and commercial auto policies accordingly, assigning higher premiums to vehicles with autonomous tech at Levels 2, 3, and 4. This is already the industry’s standard practice for drivers convicted of driving under the influence of alcohol or drugs; if such drivers are able to secure coverage at all following a DUI, they can reliably expect their premiums to skyrocket, and for good cause.

However, this is a reactive measure only. Insurers cannot predict that a given individual is more likely than another to drive under the influence. With semi-autonomous vehicles, by contrast, real-world examples make it obvious that distracted driving (a term generously applied to situations in which the human behind the wheel is completely disengaged from the work of actually driving) presents a real, potentially lethal, and entirely avoidable risk, and one that can be predicted and measured very accurately. Some in the insurance industry are already proposing measures to address the issue; as Jack Stewart wrote in Wired, “The Association of British Insurers suggests a simple, two-stage, classification for [autonomous] cars – assisted or automated – and says international regulators should get on board. Under its proposal, an “automated” car is capable of driving itself in virtually all situations, come to a stop safely if it cannot drive itself, avoid every conceivable crash, and continue working even if something in the system fails.” Given that most automotive industry analysts predict that such “automated” cars won’t be a reality for at least a decade, this standard would certainly make it easier for insurers to classify and address the risk associated with “assisted” vehicles.
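The Association of British Insurers’ proposal, as quoted in Wired, amounts to an all-or-nothing test, which can be sketched in a few lines of Python. The field and function names below are hypothetical, chosen to mirror the four quoted criteria; this illustrates the rule as described in the article, not any ABI-published specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AbiCriteria:
    """Hypothetical flags mirroring the four ABI criteria quoted above."""
    drives_itself_in_virtually_all_situations: bool
    stops_safely_if_it_cannot_drive: bool
    avoids_every_conceivable_crash: bool
    keeps_working_if_a_system_fails: bool


def abi_class(c: AbiCriteria) -> str:
    """Return "automated" only when every criterion holds, else "assisted"."""
    met = (
        c.drives_itself_in_virtually_all_situations,
        c.stops_safely_if_it_cannot_drive,
        c.avoids_every_conceivable_crash,
        c.keeps_working_if_a_system_fails,
    )
    return "automated" if all(met) else "assisted"


# A hypothetical vehicle meeting three of the four criteria is still "assisted".
near_miss = AbiCriteria(True, True, True, False)
print(abi_class(near_miss))
```

Because every criterion must hold, a vehicle that satisfies three of the four is still classified as “assisted”, which is what would let insurers treat everything short of full automation as a single risk class.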

The property and casualty insurance industry can use this reality as an opportunity to act as a force for public safety. Simply pricing auto policies for Level 2, 3, and 4 semi-autonomous vehicles the same as those for their manually operated counterparts is tantamount to inviting adverse selection. Vehicles that combine partial AI control with partial human intervention introduce a new risk that can’t be properly accounted for using traditional models. Accordingly, the industry can take a multi-pronged approach to combating the dangers inattentive drivers face behind the wheels of semi-autonomous vehicles. First, as I mentioned above, set higher premiums for vehicles that don’t properly address the risk associated with an entirely new level of driver distraction; given how drivers of such vehicles have perceived their responsibility (or lack thereof) behind the wheel, this seems entirely rational. Automatically assigning 100% fault to drivers involved in crashes that occur during semi-autonomous travel hardly seems unreasonable either. Second, directly lobby the auto industry and its regulators, encouraging all parties to adopt the classification system proposed by the Association of British Insurers and to add more fail-safe mechanisms, such as a process that accounts for the possibility of a failed handoff between vehicle and driver, until Level 5 autonomy can be realized on public roads. Third, introduce new insurance products that specifically cover losses associated with the transition between AI and human operation. Such a combined approach can play a significant role in rewarding both behaviors and technologies that fight back against this new and growing form of risk.

A recent research report from KPMG refers to the current state of autonomous vehicle development as “the chaotic middle” of the process, from the insurance industry’s perspective. It’s time for the industry to meet this chaos with a reasoned, and reasonable, response. Treat any vehicle with semi-autonomous driving systems that qualify for Level 2, 3, or 4 classification as the carrier of risk that it clearly is; being figuratively asleep at the wheel is every bit as dangerous as being literally asleep at the wheel, and people need to understand that operating a motor vehicle of any type is their own very serious responsibility.

If some good is to come from the collisions that took the lives of Elaine Herzberg, Wei Huang, and Joshua Brown, the insurance industry has the power to make it happen. It’s simply the right thing to do.